The wealth of information about our planet generated by satellites orbiting the Earth is remarkable, informative and powerful, but not without its challenges. In an article recently published in Reviews of Geophysics, Loew et al. [2017] highlighted the inherent uncertainties of satellite data and discussed different methods for validation. The editor asked the authors to explain what validation means, why it is necessary, why it is so complicated, and what could be improved.

What information and insights do satellites provide about Earth?

Satellites have become a cornerstone of Earth observation (EO). From the land and sea surface up to the upper atmosphere, they provide an (often) daily, long-term, global view of a wide variety of key properties pertaining to the weather, climate, air quality, biodiversity, land use, hazard mitigation, resource management and more. They are instrumental in advancing our understanding of the changing environment, and in helping us to identify, monitor, and address some of the great societal challenges of our time. Their importance is reflected in the central role satellites play today in the environmental monitoring programs developed by public authorities and international organizations across the globe, such as the World Meteorological Organization’s Global Observing System, the European Union’s Copernicus Program, and NASA’s Earth Observing System.

What are some of the challenges with data derived from satellites?

While an “in situ” measurement yields information about the immediate environment of the instrument, satellite data are obtained by remote sensing, i.e., they “sense” information on objects from distances ranging from several hundred kilometers to tens of thousands of kilometers. Satellites do not actually measure the target quantity, but rather reflected (solar) or thermally emitted radiation from which the target quantity can be retrieved. This retrieval is a complex process that requires assumptions and auxiliary information. Consequently, satellite data are affected by many known and unknown error sources, leading to an uncertainty in the final data product that is often difficult to assess. This uncertainty can be further amplified by instrumental degradation in the harsh space environment during the mission’s lifetime.

Furthermore, the construction of climate data records covering at least several decades from individual satellite data records (each spanning only a few years to a decade on average) is affected by the different systematic errors in each satellite record. This can cause artefacts within the combined record, hampering, for instance, our ability to accurately assess early signs of recovery of the ozone layer or to detect climate change signatures.

For all these reasons, satellite data need to be confronted regularly with independent reference measurements to check the quality of the measurements, the reported uncertainties, and the long-term stability against the requirements of the data users. This process is called validation.

Why is “validation” a particular challenge?

While the general aim of validation is clear, the practical implementation requires answers to a large set of questions.

What constitutes a suitable reference measurement? Are the spatio-temporal scales of the satellite and reference measurements sufficiently comparable, and if not, how can we overcome the scale mismatch?

Are validation results obtained at specific ground sites representative globally? Which metrics best gauge the quality of the data?

Against which user requirements do we assess the fitness-for-purpose of the data? Which terminology do we use (consider, for example, the ubiquitous confusion between error and uncertainty)?

Different EO communities have come up with different, sometimes very specific but more often complementary, answers to these questions. This implies that an effort to harmonize and share approaches across the communities could be highly beneficial.

What are some of the different approaches to validation?

The baseline approach for most EO communities is a pairwise comparison between satellite and ground-based reference measurements, and further statistical analysis on the resulting differences. These statistics include basic metrics such as: the mean difference as a proxy of the combined systematic error in the data; root-mean-square error or standard deviation of the differences as a proxy of the combined random error in the data; and linear trend determination on de-seasonalized differences as a proxy of instrumental degradation effects.
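The basic metrics listed above can be illustrated with a minimal Python sketch. It assumes the satellite and reference values have already been paired (co-located), that times are given as decimal years, and that the seasonal cycle in the differences is removed by subtracting the mean difference of each calendar month. The function name, the time convention and the de-seasonalization choice are illustrative assumptions, not a procedure prescribed by the review.

```python
import numpy as np

def pairwise_validation_metrics(t_years, satellite, reference):
    """Basic comparison statistics for co-located satellite/reference pairs.

    t_years   : observation times as decimal years (assumed convention)
    satellite : satellite values at those times
    reference : co-located reference values
    """
    t = np.asarray(t_years, dtype=float)
    diff = np.asarray(satellite, dtype=float) - np.asarray(reference, dtype=float)

    bias = diff.mean()                  # proxy for the combined systematic error
    spread = diff.std(ddof=1)           # proxy for the combined random error
    rmse = np.sqrt(np.mean(diff ** 2))  # combines bias and spread in one number

    # De-seasonalize: subtract the mean difference of each calendar month.
    # This is an assumed, simple choice; a harmonic fit is another option.
    month = np.floor((t % 1.0) * 12).astype(int)
    deseason = diff.copy()
    for m in np.unique(month):
        deseason[month == m] -= diff[month == m].mean()

    # Slope of a straight-line fit to the de-seasonalized differences,
    # in data units per year, as a proxy for instrumental drift.
    drift_per_year = np.polyfit(t, deseason, 1)[0]

    return {"bias": bias, "std": spread, "rmse": rmse,
            "drift_per_year": drift_per_year}
```

In this sketch the root-mean-square error mixes the systematic and random parts of the differences, which is why the mean and the standard deviation are reported separately alongside it.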

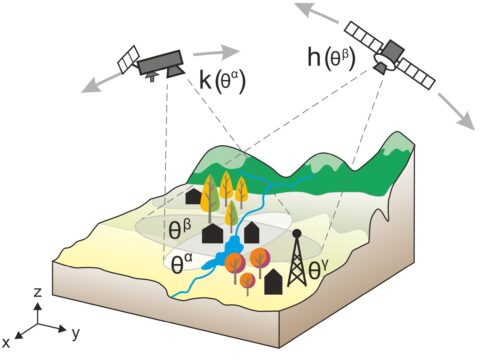

A critical issue that has to be dealt with in most cases is the so-called co-location mismatch: satellite and reference instruments don’t usually measure at the exact same location and time, nor do they offer the same spatial and temporal resolution. Various methods have been elaborated to minimize and/or quantify co-location mismatch. For example, beyond pairwise comparisons, so-called triple co-locations are used, whereby the inclusion of a third co-located dataset allows for assessing co-location mismatches, but also other aspects of uncertainty. When direct validation of the satellite-observed geophysical variable is difficult, tight links with other geophysical variables can be exploited in an indirect validation scheme, for instance using precipitation reference measurements to validate satellite data on soil moisture, or the other way round.
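As an illustration of the triple co-location idea, the sketch below uses the widely used covariance formulation. It assumes three co-located data sets that are each linearly related to the same underlying signal and whose errors are mutually uncorrelated and uncorrelated with the signal. Under those assumptions, the random error variance of each data set follows from the sample covariances alone. The function name is hypothetical, and this is one common formulation rather than necessarily the variant discussed in the review.

```python
import numpy as np

def triple_collocation_error_std(x, y, z):
    """Estimate the random error standard deviation of three co-located data sets.

    Assumes errors that are mutually uncorrelated and uncorrelated with the
    underlying signal. Each estimate is expressed in that data set's own units.
    """
    c = np.cov(np.vstack([x, y, z]))  # 3x3 sample covariance matrix

    var_x = c[0, 0] - c[0, 1] * c[0, 2] / c[1, 2]
    var_y = c[1, 1] - c[0, 1] * c[1, 2] / c[0, 2]
    var_z = c[2, 2] - c[0, 2] * c[1, 2] / c[0, 1]

    # Sampling noise can push an estimate slightly below zero; clip before
    # taking the square root.
    variances = np.maximum(np.array([var_x, var_y, var_z]), 0.0)
    return np.sqrt(variances)
```

Because co-location mismatch acts as an additional error in the comparison, it shows up in these estimates together with the measurement noise. The estimates become unreliable when the data sets are only weakly correlated, since the off-diagonal covariances in the denominators then approach zero.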

What do you consider best practices in the validation of Earth observation data?

First of all, we advocate the use of the standard terminology and methodology devised by the metrology (i.e., measurement science) and standardization community, such as the International Vocabulary of Metrology and the Guide to the Expression of Uncertainty in Measurement. Moreover, we stress the importance of full metrological traceability of the reference measurements, meaning that they are linked, through calibrations, inter-comparisons and detailed processing model descriptions, to the Système International (SI), or at least to community-agreed standards. Special care is to be given to the uncertainties of the different data sets, separating systematic and random components obtained preferably through detailed uncertainty propagation, and to co-location mismatch issues that might affect the data comparisons.
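One simple way to confront reported uncertainties with the observed differences is sketched below in Python. It assumes that each measurement comes with a standard uncertainty for its random component, that the satellite and reference uncertainties are uncorrelated (so they combine in quadrature), and that co-location mismatch is negligible. The function name is hypothetical and the check is an illustration, not a protocol taken from the review.

```python
import numpy as np

def normalized_differences(satellite, reference, u_satellite, u_reference):
    """Differences scaled by their combined standard uncertainty."""
    diff = np.asarray(satellite, dtype=float) - np.asarray(reference, dtype=float)
    # For a simple difference of uncorrelated quantities, the standard
    # uncertainties add in quadrature.
    u_combined = np.hypot(np.asarray(u_satellite, dtype=float),
                          np.asarray(u_reference, dtype=float))
    return diff / u_combined
```

If the reported uncertainties are realistic and the assumptions hold, the normalized differences should scatter with a standard deviation close to one. A clearly larger spread points to underestimated uncertainties or to unaccounted co-location mismatch, and a mean far from zero points to a systematic difference.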

Regarding the validation procedures themselves, the application of existing community-agreed protocols is encouraged. It is also important to realize that EO validation is an active research topic, and that for a number of parameters the writing of protocols still requires supporting research. We acknowledge ongoing initiatives of the Committee on Earth Observation Satellites Working Group on Calibration and Validation aimed at establishing generic and specific “validation good practice guidelines” for all families of EO instruments and data.

Where are further efforts needed to improve the performance of validation work?

While validation needs are increasingly being addressed by the (space) agencies themselves, driving dedicated research and providing funding for baseline activities, further advances can still be recommended.

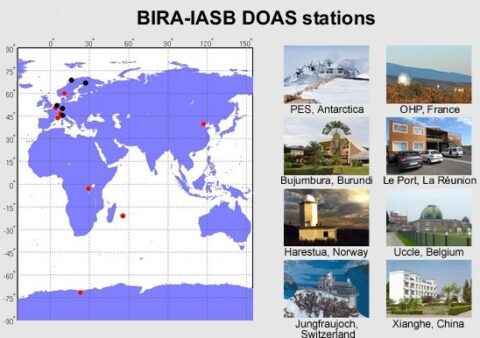

An essential need is to guarantee fast and sustained availability of traceable reference measurements. Satellite-oriented support of essential ground (network) measurement capabilities should be a continuous focal point for space agencies and for providers of satellite-based EO services. Collection of reference data and validation analysis facilities can be further operationalized. Meanwhile, the user community needs to further refine its requirements, differentiating among the many components that make up requirements on the overall accuracy and stability.

Finally, the validation community is invited to get on with the establishment and publication of best practices, to share their tools as open-source software with associated documentation, and to embark on joint developments of methods, harmonized tools and infrastructures.

—Tijl Verhoelst, Royal Belgian Institute for Space Aeronomy, Belgium; email: [email protected], with contributions from co-authors.

We dedicate this piece to our colleague, Alexander Loew, the first author of our review article, who died tragically shortly after the manuscript was accepted for publication.

Citation:

Verhoelst, T. (2017), Examining our eyes in the sky, Eos, 98, https://doi.org/10.1029/2018EO088011. Published on 07 December 2017.

Text © 2017. The authors. CC BY-NC-ND 3.0

Except where otherwise noted, images are subject to copyright. Any reuse without express permission from the copyright owner is prohibited.