Have you ever watched a student struggle to perform a seemingly straightforward analytical procedure? It may be a routine preprocessing step, like detrending a time series or removing a seasonal cycle, but somehow the simple operations can stymie a student for weeks. It’s tempting to assume that young people with their short attention spans are unwilling or unable to think through the task at hand, but a closer look suggests that students may have little choice but to blindly tinker with code until things seem to work.

In Earth science, graduate students typically enter their programs without much coding experience. They are often new to the analytical principles of their subdiscipline, and they have not yet developed a guiding intuition for the geophysical processes at the center of their work. They are thrust into research and asked to implement methods they’ve never seen before, and in contrast to students in other fields of study, they find very few opportunities to check their work.

Whereas classical physics textbooks and online forums abound with derivations of the wave equation, in the Earth sciences it can be difficult to verify that even common climate indices, like those used to track the El Niño–Southern Oscillation, have been calculated correctly. Without a way to be sure the elementary steps are being performed properly and without the sure footing of a common framework in which to perform these steps, how can we expect students to make headway on the bigger, multidisciplinary problems?

A Global Problem

The issues here affect not just students. Every established researcher in the Earth sciences has certainly devoted an afternoon or a week or a month to writing low-level code to perform a common task and has then found no clear means of validating the code. What a terribly inefficient path toward progress this is, as highly skilled scientists spend a great deal of time writing code that has been written before, rather than moving forward. But worse than simply wasting time, our way of doing business has ensured that scripting typos inevitably go undetected, leading to publication of incorrect findings that at best get caught by follow-on studies or at worst go unidentified and perpetually misguide science.

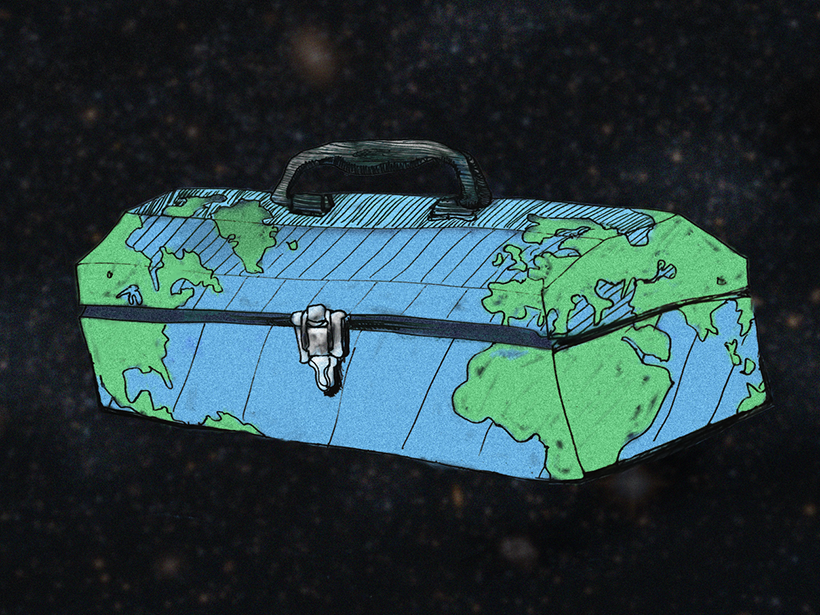

We Need Better Toolboxes

For efficiency, accuracy, and transparency in Earth science, we need to develop and adopt standard sets of well-tested tools for all our analyses. This will require investing in the development of comprehensive toolboxes that the community can use for a wide range of problems.

The toolboxes we need must be holistically designed—not just stand-alone scripts for performing specific analyses, but entire toolboxes of functions designed to work together in streamlined, repeatable workflows. Toolbox documentation should describe the steps of analysis in a pedagogical, narrative fashion, with example data that users can load to follow along with and understand the documentation. If we design our toolboxes holistically, they will naturally become starting points for students to learn and will serve as vehicles for collaboration among established scientists, providing consistent, reliable, trusted results that are easy to cite in scientific journals.

For example, a common method of obtaining the El Niño–Southern Oscillation index requires spatially averaging sea surface temperatures within a given geographic region, calculating anomalies by removing the seasonal cycle and the mean of the resulting time series, and then smoothing the remaining anomalies with a 5-month moving average. At first glance, each of these steps may seem trivial, yet their implementation can quickly become unwieldy. A spatial average of sea surface temperatures requires accounting for the variable grid cell size in a regularly spaced geographic grid; calculating a seasonal cycle requires detrending the time series before assessing the mean anomaly for each day or month of the year; and calculating a 5-month moving average can prove difficult, particularly if the data are not available at regular temporal intervals.
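To make the grid cell issue concrete, here is a minimal sketch in Python with NumPy. It assumes a regular latitude-longitude grid on which cell area scales with the cosine of latitude, and it uses a made-up temperature field rather than real data. The function name `area_weighted_mean` and the test field are illustrative assumptions, not part of any existing toolbox.

```python
import numpy as np

def area_weighted_mean(field, lat_deg):
    """Mean of a (lat, lon) field on a regular grid, weighted by cos(latitude)."""
    # On a regular lat-lon grid, cell area is proportional to cos(latitude).
    row_weights = np.cos(np.deg2rad(lat_deg))[:, None]
    weights = np.broadcast_to(row_weights, field.shape)
    return np.average(field, weights=weights)

# Hypothetical field: warm at the equator, cooling toward the poles.
lat = np.arange(-87.5, 90.0, 5.0)   # cell-center latitudes, degrees
lon = np.arange(2.5, 360.0, 5.0)    # cell-center longitudes, degrees
sst = 28.0 * np.cos(np.deg2rad(lat))[:, None] * np.ones(lon.size)[None, :]

naive = sst.mean()                        # treats every cell as equal in area
weighted = area_weighted_mean(sst, lat)   # accounts for shrinking cells near the poles
print(f"naive mean: {naive:.2f}, area-weighted mean: {weighted:.2f}")
```

For this synthetic field the unweighted mean comes out near 17.8 while the area-weighted mean is near 22.0, because the small, cold polar cells are overcounted when every cell is treated equally. Over a narrow equatorial box the difference is far smaller, but it is the kind of detail that is easy to miss and hard to check.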

A well-designed Earth science toolbox would address this problem with three simple functions designed to work together: one function to properly compute spatial averages from a georeferenced grid, another to remove seasonal cycles, and a third to calculate moving averages. Each function would be fully documented on its own, with its methods described alongside explanations of when to use the function and how to interpret its results. With the proper tools in hand, calculating the El Niño–Southern Oscillation index would then become a three-step endeavor in which the focus is fully on the physics rather than on the coding. But for this kind of solution to manifest, we will need buy-in from the community and from funding institutions.
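As a rough illustration of what such a three-function workflow might look like, the following Python sketch chains a spatial average, a seasonal-cycle removal, and a moving average. All three function names are hypothetical, the data are synthetic, and several simplifications are assumed: a regular latitude-longitude grid, monthly data at regular intervals with no gaps, and a linear detrend used only to estimate the seasonal cycle. It is not the interface of any existing toolbox.

```python
import numpy as np

def spatial_mean(field, lat_deg):
    """Area-weighted mean over the (lat, lon) axes of a (time, lat, lon) array."""
    w = np.cos(np.deg2rad(lat_deg))[:, None] * np.ones(field.shape[-1])
    return (field * w).sum(axis=(-2, -1)) / w.sum()

def remove_seasonal_cycle(series, month):
    """Subtract the monthly climatology (estimated from detrended data) and the mean."""
    t = np.arange(series.size)
    detrended = series - np.polyval(np.polyfit(t, series, 1), t)
    anomalies = series.astype(float).copy()
    for m in np.unique(month):
        # Climatology for each calendar month, estimated after detrending.
        anomalies[month == m] -= detrended[month == m].mean()
    return anomalies - anomalies.mean()

def moving_average(series, window=5):
    """Centered moving average for regularly spaced data; edges are left as NaN."""
    out = np.full(series.size, np.nan)
    half = window // 2
    out[half:series.size - half] = np.convolve(
        series, np.ones(window) / window, mode="valid"
    )
    return out

# Synthetic stand-in for a sea surface temperature product (20 years, monthly).
n_months = 240
month = np.arange(n_months) % 12 + 1
lat = np.array([-2.5, 0.0, 2.5])
seasonal = 2.0 * np.cos(2 * np.pi * (month - 1) / 12)
slow = np.sin(2 * np.pi * np.arange(n_months) / 48)  # stand-in for interannual variability
sst = (27.0 + seasonal + slow)[:, None, None] * np.ones((1, lat.size, 4))

# The three-step calculation described above.
regional = spatial_mean(sst, lat)
anomalies = remove_seasonal_cycle(regional, month)
index = moving_average(anomalies, window=5)
```

With the functions in place, the index calculation itself is three lines, and each step can be tested separately against a case with a known answer, such as the synthetic slow oscillation used here.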

Code-Sharing Requirements Aren’t Working

Until now, attempts to address the issues of transparency and replicability in science have been broadly aimed at encouraging scientists to share their code, but that approach has come up short. Putting the onus on individuals to share scripts developed for specific research projects has created a landscape of piecemeal, untested, poorly documented code and has ignored the underlying structural problem at hand.

Without standard toolboxes of well-trusted, generalized functions for common types of data analysis, it is unreasonable to expect every individual to voluntarily develop, validate, share, and effectively communicate all of their code. So rather than continuing the quixotic insistence that scripts must be uploaded with every publication, we should focus on developing standard, citable toolboxes that the community can come to know and trust.

A More Effective Way to Encourage Code Sharing

Although it may feel too easy to let scientists’ responsibility end at citing which toolboxes were used in an analysis, we must not conflate effort with efficacy. We must instead recognize that only by providing standardized frameworks for clear and streamlined analyses can we expect individuals to become more willing to place their code in the public eye. Thus, by shifting the focus away from simply requiring that authors make statements about code availability and toward addressing the underlying issues that currently stand in the way of code sharing, we may find that code becomes more reliable and gets shared more frequently.

In Earth science and in the hot-button field of climate science in particular, we need transparency, replicability, and tools that will enable any interested party to see and understand how we come to our findings. For efficiency in science, we need standardized tools that we can all use to move forward rather than repeatedly wasting time reinventing the wheel. And for students who are still developing their skills on many fronts, we need holistically designed toolboxes to let them focus on learning, rather than just tinkering with code until things seem to work.

—Chad A. Greene ([email protected]; @chadagreene), Institute for Geophysics, Jackson School of Geosciences, University of Texas at Austin; and Kaustubh Thirumalai (@holy_kau), Department of Earth, Environmental and Planetary Sciences, Brown University, Providence, R.I.

Citation:

Greene, C. A., and K. Thirumalai (2019), It’s time to shift emphasis away from code sharing, Eos, 100, https://doi.org/10.1029/2019EO116357. Published on 20 February 2019.

Text © 2019. The authors. CC BY 3.0

Except where otherwise noted, images are subject to copyright. Any reuse without express permission from the copyright owner is prohibited.