More than 50 years ago, a fundamental scientific revolution occurred, sparked by the concurrent emergence of a huge amount of new data on seafloor bathymetry and profound intellectual insights from researchers rethinking conventional wisdom. Data and insight combined to produce the paradigm of plate tectonics. Similarly, in the coming decade, a new revolution in data analytics may rapidly overhaul how we derive knowledge from data in the geosciences. Two interrelated elements will be central in this process: artificial intelligence (AI, including machine learning methods as a subset) and high-performance computing (HPC).

Already today, geoscientists must understand modern tools of data analytics and the hardware on which they work. Now AI and HPC, along with cloud computing and interactive programming languages, are becoming essential tools for geoscientists. Here we discuss the current state of AI and HPC in Earth science and anticipate future trends that will shape applications of these developing technologies in the field. We also propose that it is time to rethink graduate and professional education to account for and capitalize on these quickly emerging tools.

Work in Progress

Great strides in AI capabilities, including speech and facial recognition, have been made over the past decade, but the origins of these capabilities date back much further. In 1971, the U.S. Defense Advanced Research Projects Agency substantially funded a project called Speech Understanding Research, and it was generally believed at the time that artificial speech recognition was just around the corner. We know now that this was not the case, as today’s speech and writing recognition capabilities emerged only as a result of both vastly increased computing power and conceptual breakthroughs such as the use of multilayered neural networks, which mimic the biological structure of the brain.

Recently, AI has gained the ability to create images of artificial faces that humans cannot distinguish from real ones by using generative adversarial networks (GANs). These networks combine two neural networks, one that produces a model and a second one that tries to discriminate the generated model from the real one. Scientists have now started to use GANs to generate artificial geoscientific data sets.

These and other advances are striking, yet AI and many other artificial computing tools are still in their infancy. We cannot predict what AI will be able to do 20–30 years from now, but a survey of existing AI applications recently showed that computing power is the key when targeting practical applications today. The fact that AI is still in its early stages has important implications for HPC in the geosciences. To date, geoscientific HPC studies have been dominated by large-scale time-dependent numerical simulations that use physical observations to generate models [Morra et al., 2021a]. In the future, however, we may work in the other direction: Earth, ocean, and atmospheric simulations may feed large AI systems that in turn produce artificial data sets, enabling geoscientific investigations for which collected data are insufficient, in initiatives such as Destination Earth.

Data-Centric Geosciences

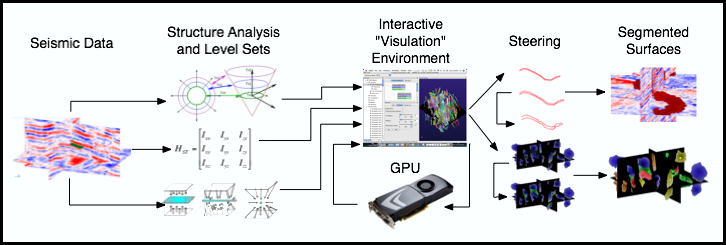

Development of AI capabilities is well underway in certain geoscience disciplines. For a decade now [Ma et al., 2019], remote sensing operations have been using convolutional neural networks (CNNs), a kind of neural network that adaptively learns which features to look at in a data set. In seismology (Figure 1), pattern recognition is the most common application of machine learning (ML), and recently, CNNs have been trained to find patterns in seismic data [Kong et al., 2019], leading to discoveries such as previously unrecognized seismic events [Bergen et al., 2019].
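To make the idea of a convolutional filter concrete, the following minimal sketch slides a single kernel along a synthetic trace and picks the strongest response. In a real CNN, many such kernels are learned from data during training; here the kernel is fixed by hand, and the wavelet shape, noise level, onset position, and all function names are illustrative assumptions rather than anything taken from the studies cited above.

```python
import numpy as np

def conv1d_valid(signal, kernel):
    """Slide the kernel along the signal and take a dot product at each
    offset (the cross-correlation that CNN layers call 'convolution')."""
    width = len(kernel)
    n_out = len(signal) - width + 1
    return np.array([np.dot(signal[i:i + width], kernel) for i in range(n_out)])

def relu(x):
    """Rectified linear unit: keep positive responses, zero out the rest."""
    return np.maximum(x, 0.0)

# Hypothetical pattern we want to find (in a trained CNN this is learned).
wavelet = np.array([0.0, 1.0, -2.0, 1.0, 0.0])

# Synthetic trace: weak Gaussian noise plus one copy of the pattern.
rng = np.random.default_rng(0)
trace = 0.05 * rng.standard_normal(200)
onset = 120
trace[onset:onset + len(wavelet)] += wavelet

# One convolutional "layer": filter, then nonlinearity.
feature_map = relu(conv1d_valid(trace, wavelet))
detected = int(np.argmax(feature_map))  # sample index of strongest response
```

The feature map peaks where the trace best matches the kernel, which is the sense in which a convolutional layer "looks at" a particular feature.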

New AI applications and technologies are also emerging; these involve, for example, the self-ordering of seismic waveforms to detect structural anomalies in the deep mantle [Kim et al., 2020]. Recently, deep generative models, which are based on neural networks, have shown impressive capabilities in modeling complex natural signals, with the most promising applications in autoencoders and GANs (e.g., for generating images from data).

CNNs are a form of supervised machine learning (SML), meaning that before they are applied for their intended use, they are first trained to find prespecified patterns in labeled data sets and to check their accuracy against an answer key. Training a neural network using SML requires large, well-labeled data sets as well as massive computing power. Massive computing power, in turn, requires massive amounts of electricity, such that the energy demand of modern AI models is doubling every 3.4 months and causing a large and growing carbon footprint.
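As a rough illustration of what a 3.4-month doubling time implies, the short calculation below converts it into a growth factor over a year. It assumes, purely for the sake of arithmetic, that the doubling time stays constant; the function name is ours.

```python
DOUBLING_MONTHS = 3.4  # doubling time quoted in the text

def growth_factor(months, doubling_months=DOUBLING_MONTHS):
    """Multiplicative growth after `months`, assuming steady doubling."""
    return 2.0 ** (months / doubling_months)

# 12 / 3.4 is about 3.5 doublings, so demand grows more than tenfold a year.
per_year = growth_factor(12)
```

Under this assumption, demand grows by a factor of between 11 and 12 each year.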

In the future, the trend in geoscientific applications of AI might shift from using bigger CNNs to using more scalable algorithms that can improve performance with less training data and fewer computing resources. Alternative strategies will likely involve less energy-intensive neural networks, such as spiking neural networks, which reduce data inputs by analyzing discrete events rather than continuous data streams.

Unsupervised ML (UML), in which an algorithm identifies patterns on its own rather than searching for a user-specified pattern, is another alternative to data-hungry SML. One type of UML identifies unique features in a data set to allow users to discover anomalies of interest (e.g., evidence of hidden geothermal resources in seismic data) and to distinguish trends of interest (e.g., rapidly versus slowly declining production from oil and gas wells based on production rate transients) [Vesselinov et al., 2019].
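The sketch below shows one simple way an unsupervised method can separate trends without labels. It uses non-negative matrix factorization with standard multiplicative updates, a simplified matrix analogue of the tensor factorization in the cited work and not the authors' implementation. The synthetic "production curves", the decline rates, and all names are assumptions made for illustration.

```python
import numpy as np

def nmf(V, rank, n_iter=2000, seed=0, eps=1e-9):
    """Factor a non-negative matrix V (n x m) as W @ H using multiplicative
    updates; W (n x rank) and H (rank x m) stay non-negative throughout."""
    rng = np.random.default_rng(seed)
    n, m = V.shape
    W = rng.random((n, rank)) + eps
    H = rng.random((rank, m)) + eps
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

# Synthetic data: 30 hypothetical wells, each a non-negative mixture of a
# rapidly declining and a slowly declining production curve.
t = np.linspace(0.0, 1.0, 50)
fast = np.exp(-8.0 * t)
slow = np.exp(-1.0 * t)
rng = np.random.default_rng(1)
mixing = rng.random((30, 2))
V = mixing @ np.vstack([fast, slow])

# No labels are supplied: the algorithm only sees V and the chosen rank.
W, H = nmf(V, rank=2)
rel_error = np.linalg.norm(V - W @ H) / np.linalg.norm(V)
```

The rows of `H` play the role of discovered trends and the rows of `W` give each well's weights on those trends. The number of components (`rank`) is itself a modeling choice that must be made or estimated separately.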

AI is also starting to improve the efficiency of geophysical sensors. Data storage limitations require instruments such as seismic stations, acoustic sensors, infrared cameras, and remote sensors to record and save data sets that are much smaller than the total amount of data they measure. Some sensors use AI to detect when “interesting” data are recorded, and these data are selectively stored. Sensor-based AI algorithms also help minimize energy consumption by and prolong the life of sensors located in remote regions, which are difficult to service and often powered by a single solar panel. These techniques include quantized CNN (using 8-bit variables) running on minimal hardware, such as Raspberry Pi [Wilkes et al., 2017].
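To indicate what using 8-bit variables means in practice, here is a minimal sketch of symmetric quantization applied to a small array of filter weights. It illustrates the general idea only and is not the scheme used in any particular sensor; the 3 × 3 kernel and the function names are hypothetical.

```python
import numpy as np

def quantize_int8(weights):
    """Map float weights to int8 using one shared scale factor."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the int8 representation."""
    return q.astype(np.float32) * scale

# Hypothetical 3 x 3 convolution kernel stored as 32-bit floats.
rng = np.random.default_rng(0)
kernel = rng.standard_normal((3, 3)).astype(np.float32)

q_kernel, scale = quantize_int8(kernel)
restored = dequantize(q_kernel, scale)
max_error = float(np.max(np.abs(kernel - restored)))  # at most about scale / 2
```

The quantized kernel needs a quarter of the memory of the 32-bit original, at the cost of a rounding error bounded by about half the scale factor. That trade-off makes this kind of representation attractive on small, low-power hardware.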

Advances in Computing Architectures

Powerful, efficient algorithms and software represent only one part of the data revolution; the hardware and networks that we use to process and store data have evolved significantly as well.

Since about 2004, when the increase in frequencies at which processors operate stalled at about 3 gigahertz (the breakdown of Dennard scaling, often loosely described as the end of Moore’s law), computing power has been augmented by increasing the number of cores per CPU and by the parallel work of cores in multiple CPUs, as in computing clusters.

Accelerators such as graphics processing units (GPUs), once used mostly for video games, are now routinely used for AI applications and are at the heart of all major ML facilities (as well as the U.S. Exascale Strategy, a part of the National Strategic Computing Initiative). For example, Summit and Sierra, the two fastest supercomputers in the United States, are based on a hierarchical CPU-GPU architecture. Meanwhile, emerging tensor processing units, which were developed specifically for matrix-based operations, excel at the most demanding tasks of most neural network algorithms. In the future, computers will likely become increasingly heterogeneous, with a single system combining several types of processors, including specialized ML coprocessors (e.g., Cerebras) and quantum computing processors.

Computational systems that are physically distributed across remote locations and used on demand, usually called cloud computing, are also becoming more common, although these systems impose limitations on the code that can be run on them. For example, cloud infrastructures, in contrast to centralized HPC clusters and supercomputers, are not designed for performing large-scale parallel simulations. Cloud infrastructures face limitations on high-throughput interconnectivity, and the synchronization needed to help multiple computing nodes coordinate tasks is substantially more difficult to achieve for physically remote clusters. Although several cloud-based computing providers are now investing in high-throughput interconnectivity, the problem of synchronization will likely remain for the foreseeable future.

Boosting 3D Simulations

Artificial intelligence has proven invaluable in discovering and analyzing patterns in large, real-world data sets. It could also become a source of realistic artificial data sets, generated through models and simulations. Artificial data sets enable geophysicists to examine problems that are unwieldy or intractable using real-world data—because these data may be too costly or technically demanding to obtain—and to explore what-if scenarios or interconnected physical phenomena in isolation. For example, simulations could generate artificial data to help study seismic wave propagation; large-scale geodynamics; or flows of water, oil, and carbon dioxide through rock formations to assist in energy extraction and storage.

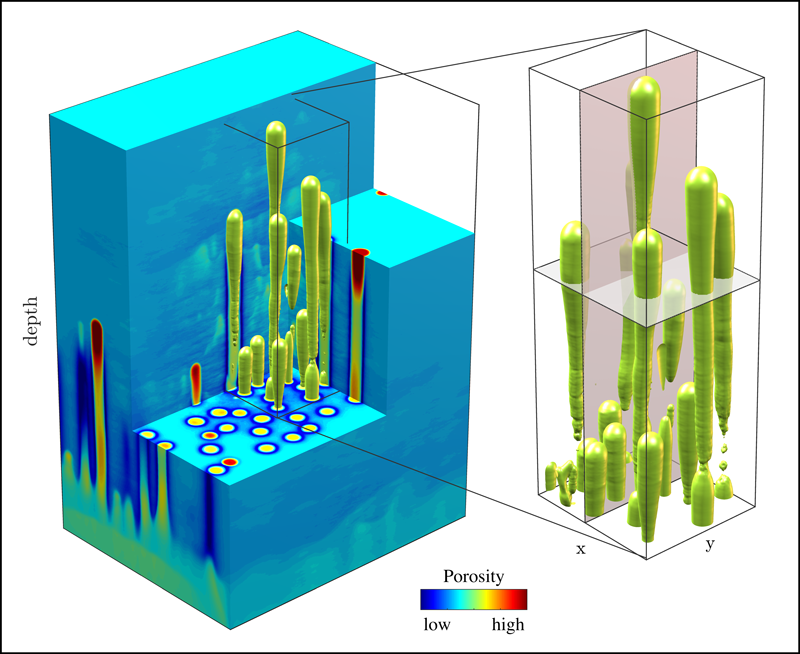

HPC and cloud computing will help produce and run 3D models, not only assisting in improved visualization of natural processes but also allowing for investigation of processes that can’t be adequately studied with 2D modeling. In geodynamics, for example, using 2D modeling makes it difficult to calculate 3D phenomena like toroidal flow and vorticity because flow patterns are radically different in 3D. Meanwhile, phenomena like crustal porosity waves (waves of high porosity in rocks; Figure 2) and corridors of fast-moving ice in glaciers require extremely high spatial and temporal resolutions in 3D to capture [Räss et al., 2020].

Adding a dimension to a model can require a significant increase in the amount of data processed. For example, in exploration seismology, going from a 2D to a 3D simulation involves a transition from three-dimensional data (i.e., source, receiver, and time) to five-dimensional data (i.e., source x, source y, receiver x, receiver y, and time) [e.g., Witte et al., 2020]. AI can help with this transition. At the global scale, for example, the assimilation of 3D simulations in iterative full-waveform inversions for seismic imaging was performed recently with limited real-world data sets, employing AI techniques to maximize the amount of information extracted from seismic traces while maintaining the high quality of the data [Lei et al., 2020].
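A back-of-the-envelope count shows how quickly the data volume grows when sources and receivers move from a line to a grid. The survey sizes below (100 positions per axis, 1,000 time samples, 4-byte values) are hypothetical round numbers chosen only to make the arithmetic easy to follow.

```python
def n_samples(n_sources, n_receivers, n_time):
    """Total number of recorded samples: one trace per source-receiver pair."""
    return n_sources * n_receivers * n_time

N_PER_AXIS = 100       # hypothetical source/receiver positions along one axis
N_TIME = 1000          # hypothetical time samples per trace
BYTES_PER_SAMPLE = 4   # 32-bit floating point values

# 2D survey: sources and receivers along a line (source, receiver, time).
samples_2d = n_samples(N_PER_AXIS, N_PER_AXIS, N_TIME)

# 3D survey: sources and receivers on a grid
# (source x, source y, receiver x, receiver y, time).
samples_3d = n_samples(N_PER_AXIS ** 2, N_PER_AXIS ** 2, N_TIME)

ratio = samples_3d // samples_2d
gigabytes_2d = samples_2d * BYTES_PER_SAMPLE / 1e9   # 0.04 GB
gigabytes_3d = samples_3d * BYTES_PER_SAMPLE / 1e9   # 400 GB
```

With these numbers the 3D data set is 10,000 times larger than the 2D one, because the two added axes each multiply the trace count by the number of positions per axis.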

Emerging Methods and Enhancing Education

As far as we’ve come in developing AI for uses in geoscientific research, there is plenty of room for growth in the algorithms and computing infrastructure already mentioned, as well as in other developing technologies. For example, interactive programming, in which the programmer develops new code while a program is active, and language-agnostic programming environments that can run code in a variety of languages are young techniques that will facilitate introducing computing to geoscientists.

Programming languages such as Python and Julia, which are now being taught to Earth science students, will accompany the transition to these new methods and will be used in interactive environments such as the Jupyter Notebook. Julia, in its most recent implementations, was shown to perform well as compiled code for machine learning algorithms, including those that use differentiable programming, which reduces computational resource and energy requirements.

Quantum computing, which uses the quantum states of atoms rather than streams of electrons to transmit data, is another promising development that is still in its infancy but that may lead to the next major scientific revolution. It is forecast that by the end of this decade, quantum computers will be applied in solving many scientific problems, including those related to wave propagation, crustal stresses, atmospheric simulations, and other topics in the geosciences. With competition from China in developing quantum technologies and AI, quantum computing and quantum information applications may become darlings of major funding opportunities, offering the means for ambitious geophysicists to pursue fundamental research.

Taking advantage of these new capabilities will, of course, require geoscientists who know how to use them. Today, many geoscientists face enormous pressure to requalify themselves for a rapidly changing job market and to keep pace with the growing complexity of computational technologies. Academia, meanwhile, faces the demanding task of designing innovative training to help students and others adapt to market conditions, although finding professionals who can teach these courses is challenging because they are in high demand in the private sector. However, such teaching opportunities could provide a point of entry for young scientists specializing in computer science or part-time positions for professionals retired from industry or national labs [Morra et al., 2021b].

The coming decade will see a rapid revolution in data analytics that will significantly affect the processing and flow of information in the geosciences. Artificial intelligence and high-performance computing are the two central elements shaping this new landscape. Students and professionals in the geosciences will need new forms of education enabling them to rapidly learn the modern tools of data analytics and predictive modeling. If done well, the concurrence of these new tools and a workforce primed to capitalize on them could lead to new paradigm-shifting insights that, much as the plate tectonic revolution did, help us address major geoscientific questions in the future.

Acknowledgments

The listed authors thank Peter Gerstoft, Scripps Institution of Oceanography, University of California, San Diego; Henry M. Tufo, University of Colorado Boulder; and David A. Yuen, Columbia University and Ocean University of China, Qingdao, who contributed equally to the writing of this article.

References

Bergen, K. J., et al. (2019), Machine learning for data-driven discovery in solid Earth geoscience, Science, 363(6433), eaau0323, https://doi.org/10.1126/science.aau0323.

Kim, D., et al. (2020), Sequencing seismograms: A panoptic view of scattering in the core-mantle boundary region, Science, 368(6496), 1,223–1,228, https://doi.org/10.1126/science.aba8972.

Kong, Q., et al. (2019), Machine learning in seismology: Turning data into insights, Seismol. Res. Lett., 90(1), 3–14, https://doi.org/10.1785/0220180259.

Lei, W., et al. (2020), Global adjoint tomography—Model GLAD-M25, Geophys. J. Int., 223(1), 1–21, https://doi.org/10.1093/gji/ggaa253.

Ma, L., et al. (2019), Deep learning in remote sensing applications: A meta-analysis and review, ISPRS J. Photogramm. Remote Sens., 152, 166–177, https://doi.org/10.1016/j.isprsjprs.2019.04.015.

Morra, G., et al. (2021a), Fresh outlook on numerical methods for geodynamics. Part 1: Introduction and modeling, in Encyclopedia of Geology, 2nd ed., edited by D. Alderton and S. A. Elias, pp. 826–840, Academic, Cambridge, Mass., https://doi.org/10.1016/B978-0-08-102908-4.00110-7.

Morra, G., et al. (2021b), Fresh outlook on numerical methods for geodynamics. Part 2: Big data, HPC, education, in Encyclopedia of Geology, 2nd ed., edited by D. Alderton and S. A. Elias, pp. 841–855, Academic, Cambridge, Mass., https://doi.org/10.1016/B978-0-08-102908-4.00111-9.

Räss, L., N. S. C. Simon, and Y. Y. Podladchikov (2018), Spontaneous formation of fluid escape pipes from subsurface reservoirs, Sci. Rep., 8, 11116, https://doi.org/10.1038/s41598-018-29485-5.

Räss, L., et al. (2020), Modelling thermomechanical ice deformation using an implicit pseudo-transient method (FastICE v1.0) based on graphical processing units (GPUs), Geosci. Model Dev., 13, 955–976, https://doi.org/10.5194/gmd-13-955-2020.

Vesselinov, V. V., et al. (2019), Unsupervised machine learning based on non-negative tensor factorization for analyzing reactive-mixing, J. Comput. Phys., 395, 85–104, https://doi.org/10.1016/j.jcp.2019.05.039.

Wilkes, T. C., et al. (2017), A low-cost smartphone sensor-based UV camera for volcanic SO2 emission measurements, Remote Sens., 9(1), 27, https://doi.org/10.3390/rs9010027.

Witte, P. A., et al. (2020), An event-driven approach to serverless seismic imaging in the cloud, IEEE Trans. Parallel Distrib. Syst., 31, 2,032–2,049, https://doi.org/10.1109/TPDS.2020.2982626.

Author Information

Gabriele Morra ([email protected]), University of Louisiana at Lafayette; Ebru Bozdag, Colorado School of Mines, Golden; Matt Knepley, State University of New York at Buffalo; Ludovic Räss, ETH Zürich, Switzerland; and Velimir Vesselinov, Los Alamos National Laboratory, N.M.

Citation:

Morra, G., E. Bozdag, M. Knepley, L. Räss, and V. Vesselinov (2021), A tectonic shift in analytics and computing is coming, Eos, 102, https://doi.org/10.1029/2021EO159258. Published on 04 June 2021.

Text © 2021. The authors. CC BY-NC-ND 3.0

Except where otherwise noted, images are subject to copyright. Any reuse without express permission from the copyright owner is prohibited.