Imagine a tropical reef in which the constant clatter of snapping shrimp overwhelms the soundscape. Artisanal fishing boats putter overhead as dolphins whistle and splash. In another scene described by Kate Stafford, an oceanographer at Oregon State University, the setting is Arctic winter, and the creaking and groaning sounds of ice are interrupted by vocal marine mammals—the moan of a bowhead whale or the knocking and ringing of a male walrus ready to mate. What you hear in these acoustic environments depends on where you are and what time of year it is, Stafford said.

Noise permeates the seas. Earth’s abiotic sounds include earthquakes and volcanoes, as well as winds and ice. Biologic sounds come from marine mammals, fish, and invertebrates. Added to the natural oceanic cacophony is the “anthrophony” of human-driven activity, including the pings of sonar systems mapping the ocean floor, the din of oil and gas exploration, and the roar of ships transporting goods between continents.

When scientists listen with sensors placed throughout the oceans, Stafford said, “it can seem quite loud and chaotic.” Marine wildlife like whales, dolphins, and many fish “are not visual like most of us humans are,” Stafford said, and “largely rely on their sense of hearing to navigate their world.” Adding persistent, loud artificial noise makes it challenging for them to conduct their lives.

As the global ocean warms, hydroacoustics—the science of sound in water—can help scientists play the role of physicians with stethoscopes, listening for signs of ecosystem health and ocean temperature changes, according to Kyle Becker, a program officer at the Office of Naval Research. “If we don’t understand the environment itself,” he asked, “how can we understand how animals interact with it?”

Sound Goes SOFAR

The ocean’s interior is actually easier to hear than to see. The reason is that in the ocean, light doesn’t travel very far, but sound—a compressional wave—can travel without losing much energy, explained Robert Dziak, a research oceanographer at NOAA’s Pacific Marine Environmental Laboratory.

Different wave frequencies, or pitches, travel different distances. Low-frequency sounds, like the deep tones of an upright bass in an orchestra, can travel far. High-frequency noises, like the bright, lilting violin’s song, will dissipate more rapidly.
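One way to put rough numbers on this contrast is Thorp's empirical formula for sound absorption in seawater. The sketch below is an illustration under stated assumptions rather than anything the interviewed scientists describe: the formula is a commonly quoted approximation, the two frequencies are arbitrary examples, and absorption is only one part of the total loss (geometric spreading is ignored).

```python
def thorp_absorption_db_per_km(freq_khz: float) -> float:
    """Approximate seawater absorption in dB/km (Thorp's empirical formula).

    freq_khz is the sound frequency in kilohertz.
    """
    f2 = freq_khz ** 2
    return (0.11 * f2 / (1.0 + f2)
            + 44.0 * f2 / (4100.0 + f2)
            + 2.75e-4 * f2
            + 0.003)


# Example frequencies chosen for illustration only (not from the article).
for label, f_khz in [("100 Hz", 0.1), ("10 kHz", 10.0)]:
    loss_db = thorp_absorption_db_per_km(f_khz) * 1000.0
    print(f"{label}: roughly {loss_db:.0f} dB absorbed over 1,000 km")
```

Under this approximation the low tone loses only a few decibels to absorption over 1,000 kilometers, whereas the high tone is effectively extinguished long before it gets that far.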

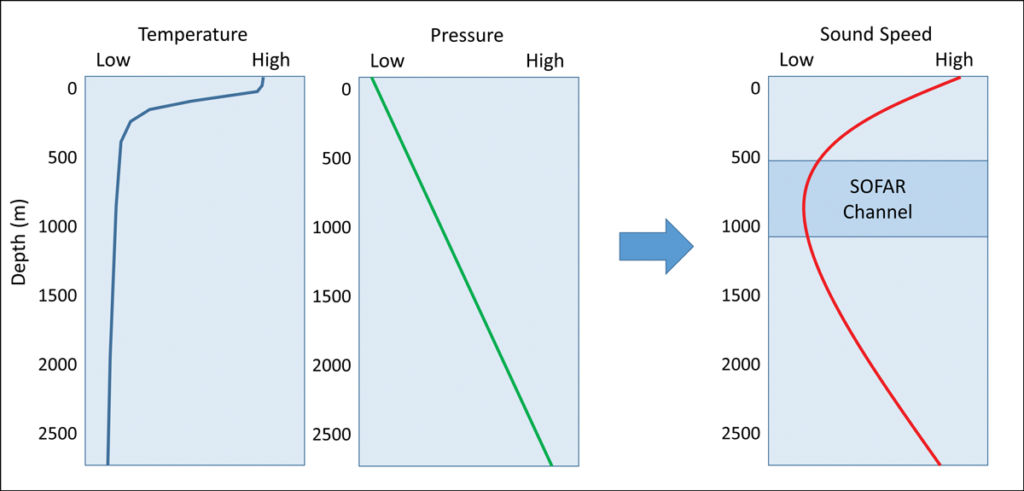

Ocean sound speed is affected by temperature, pressure, and, to a lesser degree, salinity, Stafford said.

Temperature decreases with depth to a point where it remains cold but stable; there, sound speed is at a minimum. At greater depths, increasing pressure speeds up sound. The low-velocity layer forms the axis of the sound fixing and ranging (SOFAR) channel.

Sound waves—especially low-frequency ones—can get caught in this layer because refraction bends the waves toward this zone of minimum sound speed. As a result, sound waves can travel thousands of kilometers without losing much acoustic energy, Stafford said.
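The competition between temperature and pressure can be sketched numerically. The example below uses Medwin's simplified sound speed formula and a made-up temperature profile (warm surface water cooling exponentially toward 2°C); both are assumptions for illustration, and the formula is applied past its nominal 1,000-meter depth range only to show the shape of the curve.

```python
import math


def medwin_sound_speed(temp_c: float, salinity_psu: float, depth_m: float) -> float:
    """Sound speed in m/s from Medwin's simplified empirical formula."""
    return (1449.2
            + 4.6 * temp_c
            - 0.055 * temp_c ** 2
            + 0.00029 * temp_c ** 3
            + (1.34 - 0.010 * temp_c) * (salinity_psu - 35.0)
            + 0.016 * depth_m)


def toy_temperature(depth_m: float) -> float:
    """Hypothetical profile: 20 degrees C at the surface, about 2 degrees C at depth."""
    return 2.0 + 18.0 * math.exp(-depth_m / 400.0)


depths = range(0, 5001, 10)  # meters
speeds = [medwin_sound_speed(toy_temperature(z), 35.0, z) for z in depths]

# The channel axis is the depth at which sound speed is lowest.
axis_speed = min(speeds)
axis_depth = depths[speeds.index(axis_speed)]
print(f"Surface: {speeds[0]:.0f} m/s")
print(f"Channel axis: {axis_speed:.0f} m/s near {axis_depth} m")
print(f"Deepest point: {speeds[-1]:.0f} m/s")
```

With this toy profile the minimum falls at roughly 1,000 meters, with faster water both above (warmer) and below (higher pressure). A colder, more uniform profile would move the minimum toward the surface.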

Among the first to take advantage of the SOFAR channel was the U.S. Navy: During World War II it experimented with detonating SOFAR bombs in the channel to triangulate the location of downed pilots.

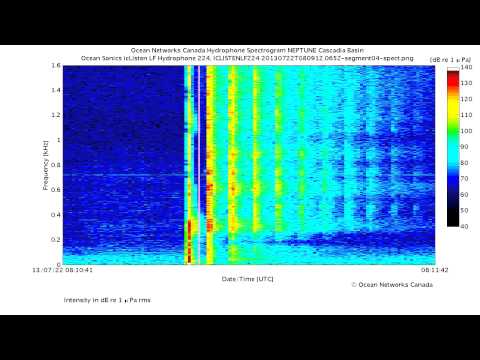

Scientists use underwater microphones to hear and locate ocean sounds inside and outside the SOFAR channel. These sensors, called hydrophones, can detect a range of frequencies, some of which are inaudible to people. To find the source of a sound, a single hydrophone won’t suffice, said Sara Bazin, a researcher at Institut Universitaire Européen de la Mer. Three or more are needed to triangulate its origin, she added.
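A minimal sketch of why several hydrophones are needed: each instrument records only an arrival time, and the moment the sound was emitted is unknown, so a location has to be inferred from how the arrival times differ across the array. The example below is a brute-force grid search with invented positions, a constant assumed sound speed of 1.5 kilometers per second, and four hydrophones (one more than the minimum, to avoid ambiguous solutions). It is not the method used by any group quoted here.

```python
import math

SOUND_SPEED_KM_S = 1.5  # assumed constant; real sound speed varies with depth


def locate_source(hydrophones, arrival_times, grid_km=range(0, 101)):
    """Return the (x, y) grid point in km that best explains the arrival times."""
    best_xy, best_misfit = None, math.inf
    for x in grid_km:
        for y in grid_km:
            travel = [math.hypot(x - hx, y - hy) / SOUND_SPEED_KM_S
                      for hx, hy in hydrophones]
            # The emission time is unknown; its least-squares estimate is
            # the mean of (arrival time - predicted travel time).
            origin = sum(a - t for a, t in zip(arrival_times, travel)) / len(travel)
            misfit = sum((a - t - origin) ** 2
                         for a, t in zip(arrival_times, travel))
            if misfit < best_misfit:
                best_xy, best_misfit = (x, y), misfit
    return best_xy


# Hypothetical array and source, in kilometers.
hydrophones = [(0, 0), (100, 0), (0, 100), (100, 100)]
true_source, emission_time_s = (30, 60), 12.0
arrivals = [emission_time_s
            + math.hypot(true_source[0] - hx, true_source[1] - hy) / SOUND_SPEED_KM_S
            for hx, hy in hydrophones]

print("Estimated source (km):", locate_source(hydrophones, arrivals))
```

With a single hydrophone, every grid point would fit equally well, because any distance can be offset by a different emission time.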

To hear distant sources, said Stafford, “you want your hydrophones in the SOFAR channel, [and] if you are more interested in local events…you put [them] on the seafloor.” Because the SOFAR channel is highly temperature dependent, its depth varies with both season and latitude. In the Arctic, it’s very shallow, whereas in tropical regions, it’s closer to 1,000 meters deep, Dziak said.

Installing a hydrophone in the SOFAR channel requires information about how temperature and salinity, which is directly related to conductivity, vary with depth. A CTD (conductivity, temperature, and depth) device can measure the requisite vertical profiles to find the channel, Bazin said. The hydrophone is then placed in a buoy that’s moored to the seafloor with a rope and anchor. Often installed in remote waters, moored hydrophones are designed to last months to years before a team must recover the instrument, collect the data, and redeploy the hydrophone.

Hydrophones can be linked to land by cables for near-real-time transmission of data, said Morgane Le Saout, a postdoctoral researcher at GEOMAR Helmholtz Centre for Ocean Research in Kiel, Germany.

The International Monitoring System, maintained by the Vienna-based Comprehensive Nuclear-Test-Ban Treaty Organization, or CTBTO, listens for underwater nuclear explosions primarily using hydrophones suspended in the SOFAR channel that are tethered to six onshore stations for satellite transmission, said Mario Zampolli, a hydroacoustic engineer at CTBTO.

“This data set, which has been listening to all sorts of [phenomena] in the ocean, atmosphere and solid Earth for [more than] 20 years,” Zampolli said, “can reveal many things about Earth processes.”

Indeed, the CTBTO data set, which is one of the longest time series of hydroacoustic data, can be made accessible to researchers who have relevant scientific questions, Stafford said.

Listening to Earth

To understand abiotic processes in the ocean environment, hydrophone arrays keep their ears on the seafloor, water column, and ocean surface. For example, in 2016, Dziak and colleagues heard the breakup of the Nansen Ice Shelf in the Ross Sea via hydrophones suspended near the SOFAR channel about 2 months before satellite images caught the cracking. “It broke free, but it remained pinned in place,” Dziak said, “until a big storm system came through.”

At the seafloor, ocean bottom seismometers can sense vibrations caused by earthquakes, Le Saout said. And hydrophones can hear these rumblings when the seismic waves transition from crust to water, turning into hydroacoustic signals.

For instance, hydrophones heard the magnitude 9.0 Great Tōhoku earthquake, which struck offshore Japan in 2011. “It was incredibly loud and projected a huge amount of sound energy into the ocean,” Dziak said.

Unlike earthquakes, which produce long, low-frequency signals, volcanic eruptions often produce short-pulsed signatures, Le Saout said, allowing listeners to distinguish these sounds. A 2015 eruption of the Axial Seamount on the Juan de Fuca Ridge produced tens of thousands of impulsive acoustic signals generated by the interaction of lava and seawater, she said.

In another example of scientific use of hydrophones, French research institutions are monitoring a growing submarine volcano that in 2018 unexpectedly sprouted off the coast of Mayotte, a small French-administered island in the western Indian Ocean. Bazin listens for the volcano’s burbling submarine lava with four hydrophones deployed in the SOFAR channel within 50 kilometers of the new volcano. A second array of hydrophones installed throughout the Indian Ocean has also heard similar lava-water interactions coming from the Indian mid-ocean ridges. Such eruption signals, Bazin said, “look very similar whether we record them 50 kilometers or 3,000 kilometers away,” thanks to the SOFAR channel.

As earthquakes and eruptions create signals for hydroacoustic stations to hear, so, too, do whales. At the same time as one of the large earthquakes near Mayotte, “there was a whale singing near [one hydrophone],” Bazin said.

“When whales are relatively nearby or very loud, you can distinguish the songs [of individuals],” Zampolli said. Whale song even helped researchers identify a new subspecies of blue whale in the Indian Ocean, he said.

Like people, marine mammals likely hear in the same range that they vocalize, Stafford said. In fact, some large whales may be able to take advantage of the SOFAR channel because they vocalize with low-frequency signals. “It doesn’t mean that a blue whale off California says, ‘hey, buddy,’ and that’s going to be heard by a whale off Japan,” she said, but the vocalization can alert the whale in Japan to head in the direction of its California counterpart. “It’s more of a navigation beacon versus an actual conversation.”

Sonar Sources Map the Seafloor

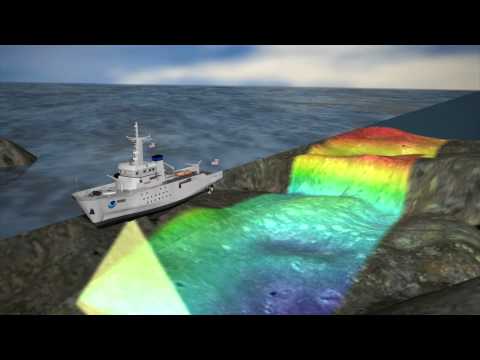

By passively listening, scientists can learn about a variety of ocean processes and ecosystems, but they sometimes make their own sounds via active acoustics. For instance, single-beam sonar systems can help boats determine depth, Dziak said.

In such systems, a voltage excites a round ceramic device, creating a pressure wave—a downgoing ping, said Larry Mayer, director of the Center for Coastal and Ocean Mapping at the University of New Hampshire. That sound bounces off the seafloor and returns, at a lower amplitude, to the same ceramic surface, creating a measurable voltage. With knowledge of ocean sound speed, time can be converted to depth, and boats can avoid running aground.
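The time-to-depth conversion is simple arithmetic: the ping travels down and back, so depth is half the round-trip time multiplied by sound speed. In the sketch below, the function name and the default of 1,500 meters per second are illustrative assumptions; in practice, sound speed would come from a measured profile.

```python
def echo_depth_m(two_way_time_s: float, sound_speed_m_s: float = 1500.0) -> float:
    """Water depth in meters from the round-trip travel time of a ping."""
    return sound_speed_m_s * two_way_time_s / 2.0


# A ping that returns after 40 milliseconds (illustrative value).
print(f"Depth: {echo_depth_m(0.04):.1f} m")

# The same echo interpreted with a sound speed that is 1% too high.
print(f"Depth with 1% speed error: {echo_depth_m(0.04, 1515.0):.1f} m")
```

Because depth scales directly with the assumed sound speed, a 1% error in sound speed becomes a 1% error in depth.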

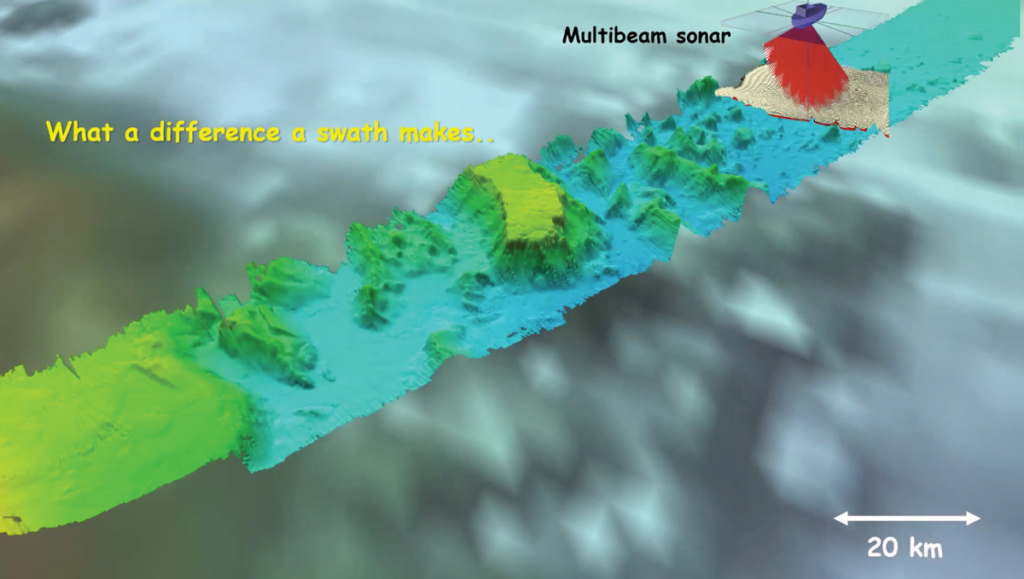

This method was how oceanographers mapped the seafloor through much of the past century. Then, multibeam sonar systems were introduced with numerous ceramic elements placed in complex arrays, Mayer explained. The acoustic source creates a narrow band of sound, like a flashlight with a thin, rectangular slit. A different set of ceramics perpendicular to the source measures the return signal, he explained. With some signal processing, the result is a detailed picture of cracks, crevasses, mountains, and sediments on the seafloor.

Multibeam sonar “was this absolute revolution in the way we look at the seafloor,” Mayer said. Still, only about 25% of the ocean has been mapped in great detail via multibeam sonar. (Bathymetry for the other 75% comes from satellite data, which is far less accurate and, in some cases, hundreds of meters off.)

The U.S. Navy uses sonar to spot stealthy submarines. “That’s where midfrequency sonars come into play,” Becker said.

Midfrequency Navy sonar, Mayer explained, operates at the same decibel level as multibeam sonar used for mapping, but to find objects throughout the water rather than just on the seafloor, its pulses go out in almost all directions. The pulses are also much longer (seconds of sound versus milliseconds), and the overall amount of energy emitted is much greater, he said.

Sonar used for depth sounding and fish finding operates at high frequencies and low amplitudes that aren’t likely to affect many marine species, Stafford said. However, she continued, midfrequency sonar “has been implicated in beached whale strandings around the world.” Whether strandings result from “scaring” whales, causing them to surface quickly and get the bends (decompression sickness caused by rapid decrease of water pressure), or from some other aspect of the military exercise, is unclear, she said.

To test the relationship between midfrequency sonar and whale behavior, the Navy monitored Cuvier’s beaked whales at the Southern California Anti-Submarine Warfare Range. When the whales were feeding during Navy sonar transmission, the noise interrupted their behavior, and in some cases, the whales left the range for several days, Mayer said. “There was a clear, direct impact.”

To see whether multibeam sonar systems have the same effect, Mayer and his team brought their loudest multibeam sonar—one that produces low frequencies that can map down to 11,000 meters below sea level—to the same range to repeat the same experiment. They found no change in the feeding behavior of the same population of whales that initially fled the range.

Anthropogenic Air Guns, Commercial Cacophony

Large whales sing at the same frequencies as the sounds of commercial shipping and oil and gas exploration. When the frequency of a human-made sound overlaps with that of marine animals, Stafford said, animals’ ability to hear predators or navigate is affected. “That’s problematic.”

Yet both oil and gas exploration surveys and geologists who study the oceanic crust to a depth of several kilometers need very large air gun surveys, Bazin said. For instance, mapping underground details of Mayotte’s new volcano required geologists to tow air guns behind a ship, using their shots as seismic sources.

But the air gun surveys near Mayotte also masked the sounds of subsea lava emerging from the new volcano, hiding the sounds Bazin was seeking. “Noise for certain people is actually the source [of data] for another type of researcher,” she said.

Air guns produce a pulse of high energy across many low frequencies, Dziak said, which ensures that the signals get to the seafloor and then into the crust to bounce off geologic structures. “There’s a lot of oil exploration going on in the world,” Dziak said. “There’s periods where you see lots and lots of air gun noise.”

Only intermittent topographic features of the seafloor, said Bazin, keep these sounds from traveling long distances through the SOFAR channel and into receivers, whether they be hydrophones or whales’ ears.

Hydrophones (and probably also whales) around the world hear the constant hum of commercial shipping, as well as air guns, Stafford said. To explore shipping noise over time at a global scale, Jukka-Pekka Jalkanen, a researcher at the Finnish Meteorological Institute, and his team combined transponder data and vessel descriptions for the global fleet, tracking where each ship went. To convert that information into shipping noise, they applied a noise model that predicts noise emissions for individual ships, he said. The team found that container ships are the largest contributor to shipping noise, mostly because they are large vessels that go fast.

One major culprit is cavitation, the formation and collapse of bubbles. Ship propellers form bubbles as they rotate, and they often have imperfections that yield more bubbles. Faster ships make more bubbles. When the bubbles break, it’s noisy, Stafford said. But machinery clunking into the water and improperly secured engines also contribute to shipping noise. “A really well built ship will be a quieter ship,” she said.

At the same time that technology is improving ship quality, which would reduce associated noise like cavitation, the total amount of shipping is increasing. Jalkanen likened this to buying a fuel-efficient car and driving it more often than the gas-guzzler you previously owned.

In the short term, changes in shipping activity such as slowing down and avoiding marine protected areas can help protect marine ecosystems from excess noise, Jalkanen said. Long-term changes necessitate both modifying ship design and retrofitting existing ships. At the moment, however, there are no legal obligations for noise reduction, though underwater noise is recognized by the European Union as a pollutant.

Sound Speed Takes Center Stage

As the ocean warms, the SOFAR channel deepens, and overall, ocean sound speed increases. With climate change resulting in extreme ocean temperatures, changes in sound speed can affect animals’ ability to feed, migrate, or even mate. “Almost all marine mammals that we know of rely on sound as their primary modality,” Stafford said.

A study modeling ocean sound speed under a business-as-usual climate change scenario suggests that increases aren’t likely to be uniform, said Chiara Scaini, a researcher at the Istituto Nazionale di Oceanografia e di Geofisica Sperimentale in Trieste, Italy, and coauthor of the modeling study. In this scenario, researchers have identified certain acoustic hot spots predicted to substantially up their sound speeds.

To demonstrate how these changes might affect mammals who live in one such hot spot in the northern Atlantic, Scaini and her colleagues focused on vocalizations of the critically endangered North Atlantic right whale. The whales’ calls should propagate farther in a warmer ocean, said Alice Affatati, the lead author of the study, also at the Istituto Nazionale di Oceanografia e di Geofisica Sperimentale. But sounds in general would propagate faster in a warm ocean, including anthropogenic noise that might be more swiftly transported around the ocean via changes in the SOFAR channel. In other words, the background noise level may increase, making it harder for whales and other marine wildlife to hear each other.

Cartography of Loss

The study on future ocean sounds has been translated into a performance, “Cartography of Loss,” choreographed by Giulia Bean and performed by Chiara Nadalutti. The dance turns sound speed profiles, which are just numbers, into movement. In the piece, half of the dancer’s body moves according to today’s profile as her other half demonstrates the predicted future profile. “Even if you are not used to reading a graph, you can look at the dancer and see her moving very differently for present and future,” Affatati said.

From this literal stage, the ocean acoustic environment and its many actors and scenes might find a new audience.

Taking the Ocean’s Temperature

Warming waters are also forcing species to change where they go and when. Hydrophones can hear fish and marine mammals moving farther north into the Arctic than they used to, Stafford said. The instruments can also directly help gauge ocean temperature increases resulting from climate change.

The ocean acts like a battery that stores heat, Zampolli said, so small increases in temperature over a large volume can mean big changes in the amount of energy stored. Keeping track of the heat helps scientists see some of the effects of climate change and learn how marine life responds. Satellites can monitor temperatures at the surface. Underwater instruments, known as Argo floats, descend to depths of 2,000 meters, drift for 10 days, and then resurface, sending vertical profiles of temperature via satellite. “But [Argo floats are] always a point sample, and they are spaced a few hundred kilometers apart.”

To measure temperature variations over time at high resolution across immense volumes of ocean, acoustic tomography shows great promise, Zampolli said. The approach requires an acoustic source whose position and timing are known precisely, a well-understood propagation path, and distant hydrophones that record the signal after it travels through the SOFAR channel. Repeated measurements of the signal’s travel time over years then yield information about long-term changes in sound speed, and therefore changes in average ocean temperature, along the propagation path. The hotter the water is, the faster the signal propagates.
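The arithmetic behind this idea can be sketched in a few lines. The numbers below are invented for illustration: a 3,000-kilometer path, a travel time that shortens by a tenth of a second, and an assumed sensitivity of about 4.2 meters per second of sound speed per degree Celsius, which is a rough figure for cold water and not a value given by the researchers.

```python
def mean_sound_speed(path_length_m: float, travel_time_s: float) -> float:
    """Path-averaged sound speed in m/s."""
    return path_length_m / travel_time_s


def temperature_change_c(path_length_m: float,
                         time_then_s: float,
                         time_now_s: float,
                         speed_per_degree: float = 4.2) -> float:
    """Path-averaged warming implied by a change in travel time.

    speed_per_degree (m/s per degree C) is an assumed sensitivity.
    """
    speed_change = (mean_sound_speed(path_length_m, time_now_s)
                    - mean_sound_speed(path_length_m, time_then_s))
    return speed_change / speed_per_degree


# Hypothetical measurements along a 3,000 km path.
path_m = 3.0e6
warming = temperature_change_c(path_m, time_then_s=2027.0, time_now_s=2026.9)
print(f"Implied average warming: {warming:.3f} degrees C")
```

In this example a travel-time change of only 0.1 second over more than half an hour of propagation corresponds to a path-averaged warming of about two hundredths of a degree, which is why precise source timing matters so much.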

However, the history of this method is fraught. The main project that ran between 1996 and 2006, called Acoustic Thermometry of Ocean Climate, or ATOC, was designed to send low-frequency signals for 20 minutes every 4 hours through the northern Pacific basin. Because of early concerns over the effect of these sound sources on marine mammals that vocalize in the same frequencies, ATOC was eventually defunded.

Now, scientists are finding other acoustic tomography methods to safely study the ocean’s temperatures by using either natural sources, such as repeating earthquakes, or very low level human-produced sounds that are transmitted in a particular sequence. Nevertheless, a noisy ocean can potentially decrease the detectability of these sometimes subtle acoustic tomography signals.

“There’s a lost couple of decades,” Becker said, because acoustic tomography was taken off the table. “I don’t know of any other way you can synoptically probe the interior of the ocean on basin scales.”

“The human cry that was raised about [ATOC] and about the use of Navy sonar actually ended up driving a tremendous amount of research to figure out how animals make sound and the impacts of sound on animals,” Stafford said. ATOC taught scientists a lot about doing experiments responsibly, she said, and served as a reminder that “we’re not the only creatures on the planet.”

—Alka Tripathy-Lang (@DrAlkaTrip), Science Writer